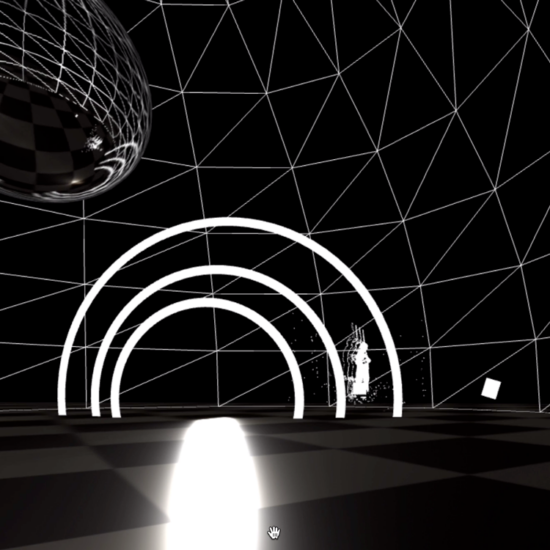

We present the implementation of a multi-user metaverse environment for telematic concert performances. It uses low-latency, real-time, WebRTC-based peer-to-peer (P2P) broadcasting for audiovisual volumetric rendering, combining binaural spatial audio with 3D video streamed from depth cameras. The system is built on a web-based framework, yielding a platform-independent online multi-user environment compatible with the WebXR standard. The audio of both the performers and the audience can be streamed and shared in the environment, where it is rendered binaurally. In addition, the performers’ depth images are streamed from 3D cameras and rendered in the environment as three-dimensional point clouds. The result is a real-time, six-degrees-of-freedom (6-DoF) audiovisual volumetric performance in a virtual 3D world that provides an immersive concert experience. It can be experienced on any WebXR-compatible device, whether on a computer screen, a mobile device, or a VR or AR system. The system was developed and evaluated with a first-of-its-kind virtual performance, “The Entanglement.”
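The abstract does not say how the streamed depth images are turned into point clouds. A common approach, assumed here rather than taken from the paper, is to back-project each valid depth pixel (u, v) with depth Z through a pinhole camera model: X = (u − c_x)·Z / f_x and Y = (v − c_y)·Z / f_y. The TypeScript sketch below illustrates that step. The `Intrinsics` type, the `depthToPointCloud` function, and the millimetre depth scale are hypothetical and do not describe the authors' implementation.

```typescript
// Hypothetical pinhole intrinsics; the paper does not specify the depth camera model.
interface Intrinsics {
  fx: number; // focal length in pixels along x
  fy: number; // focal length in pixels along y
  cx: number; // principal point x in pixels
  cy: number; // principal point y in pixels
}

/**
 * Back-projects a row-major depth image into a flat [x, y, z, x, y, z, ...]
 * array of points in metres. Pixels with raw depth 0 (no reading) are skipped.
 */
function depthToPointCloud(
  depth: Uint16Array,
  width: number,
  height: number,
  k: Intrinsics,
  depthScale = 0.001, // assumption: raw depth values are in millimetres
): Float32Array {
  const points: number[] = [];
  for (let v = 0; v < height; v++) {
    for (let u = 0; u < width; u++) {
      const raw = depth[v * width + u];
      if (raw === 0) continue; // missing or invalid depth sample
      const z = raw * depthScale;
      const x = ((u - k.cx) * z) / k.fx;
      const y = ((v - k.cy) * z) / k.fy;
      points.push(x, y, z);
    }
  }
  return new Float32Array(points);
}

// Usage: a 2x2 depth frame with one missing pixel.
const frame = new Uint16Array([1000, 0, 2000, 1000]);
const cloud = depthToPointCloud(frame, 2, 2, { fx: 1, fy: 1, cx: 0, cy: 0 });
console.log(cloud.length / 3); // 3
```

The resulting flat `Float32Array` maps directly onto a GPU vertex buffer, which is one reason this layout is often preferred for real-time point-cloud rendering in the browser. Note that image rows grow downward, so a renderer with a y-up convention would typically negate y.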

The multi-user metaverse environment was tested and further developed during a residency at the Institute for Computer Music and Sound Technology at Zurich University of the Arts.