In telematic performances, both the actors (performers and audience) and the mediating technologies (cameras, microphones, screens, loudspeaker arrays) are usually deployed statically; the visual and auditory perspective thus appears fixed and often resembles a central perspective. This facilitates the control and precise handling of the sonic, linguistic and visual information that travels between the connected spaces of the telematic network. At the same time, however, such procedures diminish spatial atmospheres and the experience of embodiment, and with them the potential for immersion in both the local and the remote space. Yet it is precisely the interplay of dislocated spaces and their unique atmospheres (whether authentic or staged) in temporal synchronisation that distinguishes telematics from other media forms.

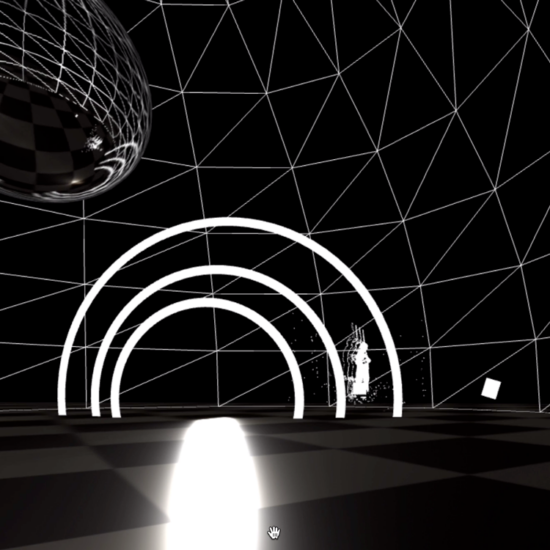

In our approach to telemersive performances, we therefore combine the concept of ‘spatial augmented reality’ (SAR) with the idea of ‘spatial metacomposition’, and apply both to the telematic context using advanced technologies. Unlike ‘virtual reality’ (VR), SAR does not replace the real-world environment with a simulated one but alters one’s ongoing perception of that environment. Unlike ‘augmented reality’ (AR), SAR detaches the display technology (such as head-mounted displays) from the user and integrates it into the environment. Today’s (remote) sensing technologies, in turn, make it possible to set up interactive audiovisual spaces. The related term ‘spatial metacomposition’ describes a form of spatial design in which space is used to arrange audiovisual elements rather than being treated merely as an environment; we extend this term towards ‘telemersive metadramaturgies’: “Succinctly put, [telemersive metadramaturgies] generate [audiovisual] output as a function of a performer’s position in space.” (Robert Jarvis and Darrin Verhagen, “Composing in Spacetime with Rainbows: Spatial Metacomposition in the Real World”, in: Proceedings of the 19th International Audio Mostly Conference: Explorations in Sonic Cultures, 2020).
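To make the idea of “output as a function of a performer’s position” concrete, the following is a minimal sketch, not the authors’ implementation. It assumes a 2D tracked position in metres and hypothetical circular “zones”, each anchoring one audiovisual element; the names `SoundZone` and `zone_gains` and the linear falloff are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class SoundZone:
    """Hypothetical audiovisual element anchored at a point in the space."""
    name: str
    x: float       # zone centre, metres
    y: float       # zone centre, metres
    radius: float  # metres; beyond this distance the element is silent


def zone_gains(px: float, py: float, zones: list[SoundZone]) -> dict[str, float]:
    """Map a tracked performer position (px, py) to one gain per zone.

    Gain falls off linearly from 1.0 at the zone centre to 0.0 at its
    radius, so the resulting mix depends only on where the performer stands.
    """
    gains = {}
    for z in zones:
        d = math.hypot(px - z.x, py - z.y)
        gains[z.name] = max(0.0, 1.0 - d / z.radius)
    return gains


if __name__ == "__main__":
    zones = [SoundZone("a", 0.0, 0.0, 2.0), SoundZone("b", 3.0, 0.0, 2.0)]
    print(zone_gains(1.0, 0.0, zones))
```

Standing at (1, 0) gives zone `a` half gain and leaves zone `b` silent. In practice the same mapping could drive visual parameters as well as audio levels, and the linear falloff could be swapped for any other curve.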

The presentation provides insight into the development of technological setups and related narrative procedures in which these considerations are applied in a telemersive and interactive one-to-one encounter between an audience member and a performer (transmitted from a remote space). Two specific use cases are described which, on the level of content, rewrite science fiction as climate fiction (Stanislaw Lem’s Solaris and Arkadi and Boris Strugatzki’s Roadside Picnic). On the visual level, remote sensing technologies such as photogrammetry and 360° and volumetric cameras (some of them mobile) are used; their output is streamed into the audience space via a tablet with which the audience member can navigate freely through the remote space, using it as a mobile window or virtual camera, comparable to a first-person perspective in a video game. Motion tracking systems allow accurate positioning of the performer and the audience member as well as the camera and the tablet, enabling multi-perspective communication. The tracking data is also used to make the remote space acoustically tangible: signals from 3D and conventional microphones installed there are streamed into the audience space via open-back headphones and a loudspeaker array. Superimposing binaural and ambisonic rendering makes it possible to experience spatial movements acoustically in mutual dependence, while also enabling sonic zoom effects that oscillate between distance and intimacy. The presentation introduces a number of technological innovations from the ongoing research that enable such telemersive metadramaturgies.
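One way tracking data could drive the superimposition of binaural and ambisonic layers is sketched below. This is an illustrative assumption, not the setup described in the presentation. The function names, the `near`/`far` thresholds and the equal-power crossfade are hypothetical choices. As the tracked performer approaches the listener, the mix shifts from the room-scale loudspeaker layer towards the intimate headphone layer (a simple “sonic zoom”). A second helper computes the source azimuth relative to the listener’s tracked heading, which a binaural renderer would need.

```python
import math


def sonic_zoom_gains(listener_xy, performer_xy, near=0.5, far=4.0):
    """Hypothetical distance-driven crossfade between a binaural (headphone)
    layer and an ambisonic (loudspeaker) layer.

    near/far are assumed thresholds in metres: at or below `near` only the
    binaural layer sounds; at or beyond `far` only the ambisonic layer.
    Returns (binaural_gain, ambisonic_gain) with an equal-power law, so
    binaural**2 + ambisonic**2 == 1 at every distance.
    """
    d = math.dist(listener_xy, performer_xy)
    # Normalise distance to t in [0, 1]: 0 = intimate, 1 = distant.
    t = min(1.0, max(0.0, (d - near) / (far - near)))
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


def source_azimuth_deg(listener_xy, listener_yaw_deg, source_xy):
    """Azimuth of a source relative to the listener's facing direction.

    Angles are measured counter-clockwise from the +x axis (yaw likewise);
    the result is wrapped to [-180, 180), with 0 meaning straight ahead.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    absolute = math.degrees(math.atan2(dy, dx))
    return ((absolute - listener_yaw_deg + 180.0) % 360.0) - 180.0


if __name__ == "__main__":
    print(sonic_zoom_gains((0.0, 0.0), (0.0, 2.25)))
    print(source_azimuth_deg((0.0, 0.0), 90.0, (1.0, 0.0)))
```

The equal-power law keeps perceived loudness roughly constant during the crossfade. Other curves, or a per-layer distance rolloff, would shape the transition between distance and intimacy differently.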